Microsoft and OpenAI have recently issued a joint warning about hacking groups increasingly using artificial intelligence (AI) in their cyberattacks. This raises serious concerns about the potential misuse of AI for malicious purposes and the need for robust cybersecurity measures.

What AI Tools Are Hackers Using?

The report highlights that hackers are exploiting various AI tools, including:

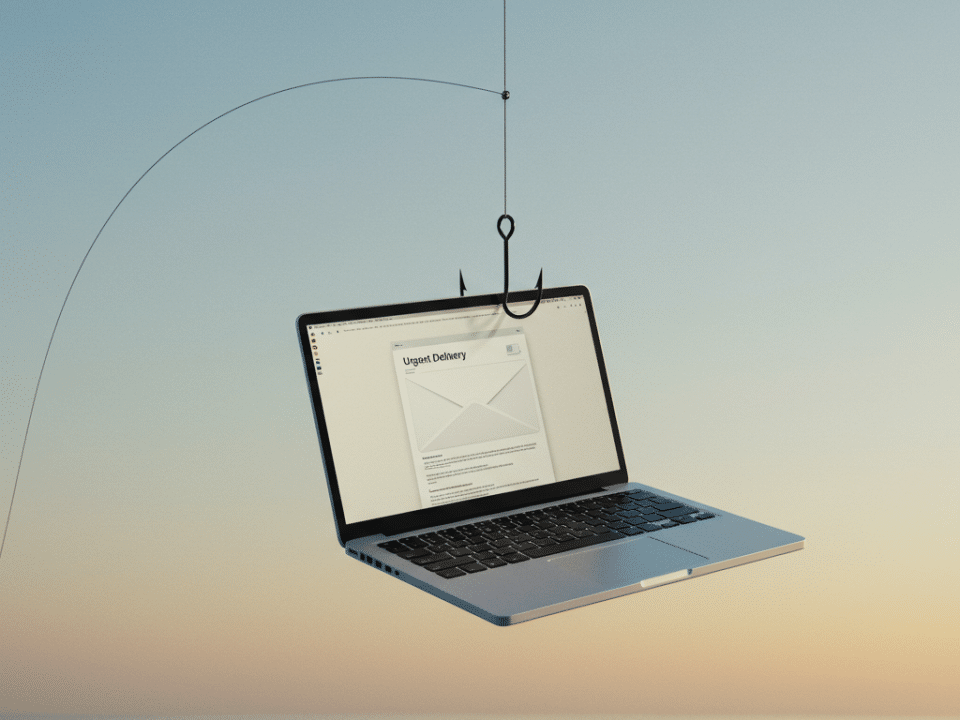

- Large language models (LLMs): These are powerful AI systems trained on massive amounts of text data, enabling them to generate human-quality text, translate languages, and produce many kinds of written content. Hackers can exploit LLMs to draft convincing phishing emails, craft social engineering lures, and even assist in developing malware.

- Machine learning (ML): This technology allows computers to learn patterns from data and improve without being explicitly programmed for each task. Hackers can use ML algorithms to automate tasks like vulnerability scanning, password cracking, and even launching distributed denial-of-service (DDoS) attacks.

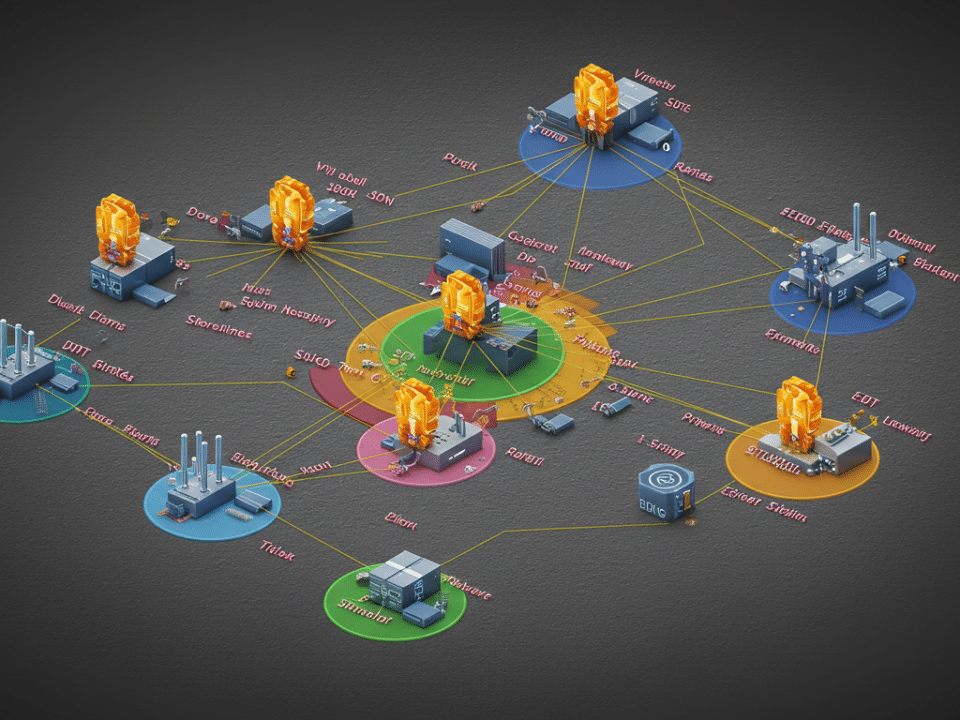

- AI-powered reconnaissance: Hackers can leverage AI to gather intelligence on potential targets, such as identifying vulnerabilities in systems, mapping network structures, and even predicting human behavior patterns.

What Are the Implications?

The use of AI in cyberattacks presents several challenges:

- Increased Attack Sophistication: AI can automate tasks, making attacks faster and more efficient. It can also personalize attacks, making them more targeted and difficult to detect.

- Evolving Threat Landscape: As AI technology continues to develop, hackers will likely find new and innovative ways to misuse it. This necessitates constant vigilance and adaptation from cybersecurity professionals.

- Attribution Challenges: AI-powered attacks can be difficult to trace back to their source, making it harder to hold perpetrators accountable.

What Can We Do?

Despite these challenges, there are steps we can take to mitigate the risks posed by AI-powered cyberattacks:

- Raise awareness: Educating the public and organizations about the potential misuse of AI is crucial. This includes understanding how AI works and the red flags to watch out for.

- Develop robust cybersecurity defenses: Organizations need to implement comprehensive cybersecurity measures, including firewalls, intrusion detection systems, and regular security audits.

- Promote responsible AI development: Developers and researchers must prioritize ethical considerations and build safeguards to prevent AI from being used for malicious purposes.

- Foster international collaboration: Governments and cybersecurity agencies worldwide need to collaborate to share information, develop best practices, and coordinate efforts to combat AI-powered cyber threats.
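To make the idea of an intrusion detection rule more concrete, here is a minimal sketch of one classic check: flagging a source address that racks up too many failed logins in a short time window, a common signature of automated password guessing. The `LoginEvent` record, the `FailedLoginDetector` class, and the default thresholds are hypothetical choices for illustration, not part of any product mentioned in the report; real IDS tools combine many richer signals.

```python
from __future__ import annotations

from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    """Hypothetical login record: time, origin, and outcome."""
    timestamp: float  # seconds since some reference point
    source_ip: str
    success: bool


class FailedLoginDetector:
    """Flags a source IP once it exceeds `max_failures` failed logins
    within a sliding window of `window_seconds` (illustrative thresholds)."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        # Per-IP queue holding timestamps of recent failed attempts.
        self._failures: dict[str, deque[float]] = defaultdict(deque)

    def observe(self, event: LoginEvent) -> bool:
        """Record one event; return True if its source IP should be flagged."""
        if event.success:
            return False
        window = self._failures[event.source_ip]
        window.append(event.timestamp)
        # Drop failures that have fallen out of the sliding window.
        while window and event.timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_failures


if __name__ == "__main__":
    detector = FailedLoginDetector(max_failures=3, window_seconds=10.0)
    # Five rapid failures from one address, one stray failure from another.
    events = [LoginEvent(float(t), "203.0.113.7", False) for t in range(5)]
    events.append(LoginEvent(2.5, "198.51.100.2", False))
    for e in events:
        if detector.observe(e):
            print(f"ALERT: {e.source_ip} exceeded failure threshold at t={e.timestamp}")
```

A fixed threshold like this is easy to reason about but also easy for an adaptive attacker to stay under, for example by spreading attempts across many addresses; that limitation is one reason defenders increasingly layer anomaly detection and other signals on top of simple rules.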

Conclusion

The use of AI by hacking groups is a worrying trend, but it is not insurmountable. By working together, we can develop the necessary safeguards and awareness to mitigate these risks and ensure that AI is used for good, not harm.

#AI #cybersecurity #hacking #machinelearning #LLMs #cyberattacks #threatlandscape #ethicalAI