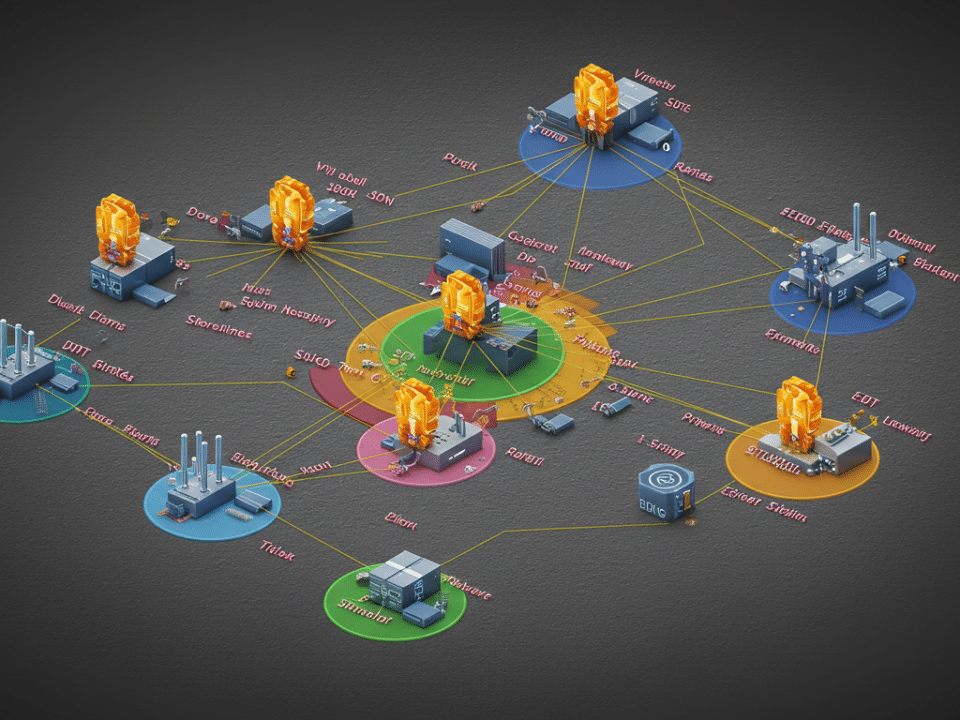

The Silent Threat Within: Why OT Cybersecurity Needs Your Urgent Attention

June 11, 2024

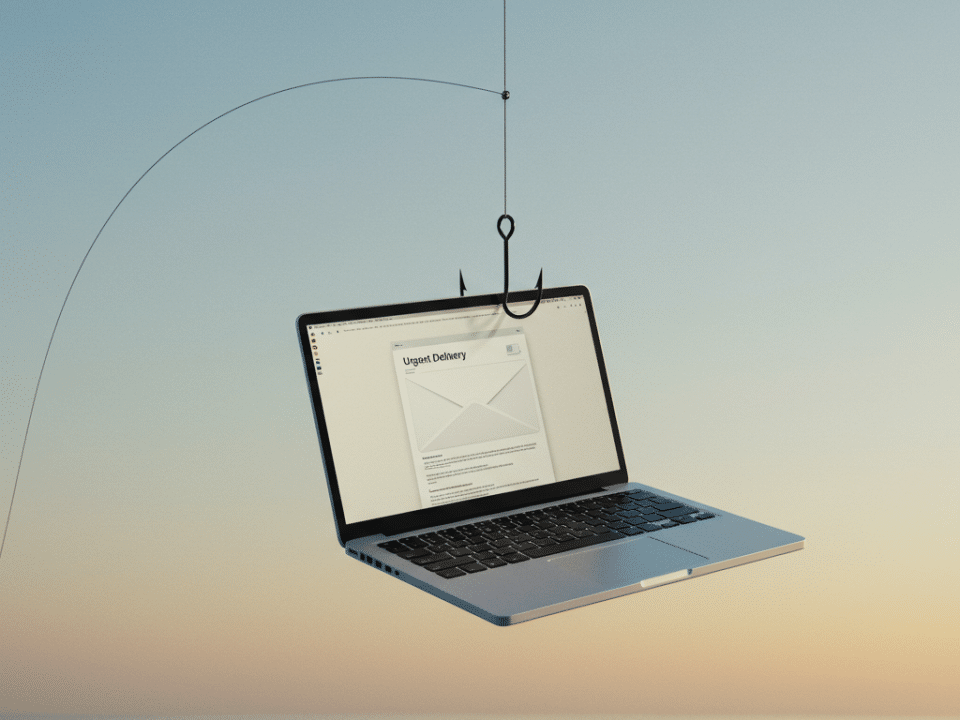

Phishing Gets an App Makeover: How PWAs Are Stealing Your Login Credentials

June 14, 2024

Artificial intelligence (AI) is revolutionizing the way we interact with technology. From composing captivating content to generating creative ideas, large language models (LLMs) like ChatGPT are becoming increasingly sophisticated tools. But as a cybersecurity expert, I approach these advancements with a healthy dose of caution. This blog post by Krypto IT explores the potential risks of oversharing with LLMs and offers practical tips for secure interactions in the exciting world of AI.

The Double-Edged Sword of AI: Power and Peril

LLMs excel at processing massive amounts of data and identifying patterns. This allows them to generate realistic text, translate languages, and write many kinds of creative content. However, that same power also creates security risks:

- Data Privacy Concerns: The training data used to develop LLMs can contain sensitive information, and anything you type into a prompt may be stored by the provider or used for future training. Sharing confidential details with an LLM could therefore expose them, especially if the model, its logs, or its training data is compromised.

- Unintentional Bias: LLMs trained on biased data can perpetuate those biases in their outputs. Sharing sensitive information could inadvertently reinforce these biases and lead to discriminatory or harmful results.

- Security Through Obscurity: Cybersecurity often relies on keeping certain information confidential, such as exploit details or specific attack methods. Sharing this type of information with an LLM could make it easier for malicious actors to develop new cyber threats if that information leaks or resurfaces in later outputs.

Sharing Wisely: A Cybersecurity Expert’s Tips for Safe Interactions with LLMs

While caution is necessary, we don’t have to avoid LLMs altogether. Here’s how to interact with them securely:

- Think Before You Share: Critically evaluate the information you’re considering sharing with an LLM. Is it publicly available knowledge, or is it confidential or sensitive?

- Maintain a Healthy Dose of Skepticism: Don’t assume the information generated by an LLM is always accurate. Verify facts and conduct your own research before relying on LLM outputs.

- Beware of Social Engineering Tactics: Malicious actors can use LLMs to write more convincing phishing emails and social engineering lures. Remain vigilant, and don’t share personal or financial information in response to unsolicited messages, however polished they appear.
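To make the "Think Before You Share" tip concrete, here is a minimal Python sketch of scrubbing a prompt before it is sent to any LLM. The `redact` function and the three patterns are hypothetical, chosen purely for illustration; they are not part of any Krypto IT product or LLM provider API, and a real deployment would need a far broader, vetted rule set or a dedicated data loss prevention tool.

```python
import re

# Hypothetical, deliberately small pattern set (an assumption for this sketch).
# Simple regexes like these will miss many kinds of sensitive data.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # US Social Security number in the common 3-2-4 layout.
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which kinds were found."""
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            found.append(label)
    return prompt, found


if __name__ == "__main__":
    text = "Contact jane.doe@example.com, card 4111 1111 1111 1111."
    clean, kinds = redact(text)
    print(clean)
    print("Redacted:", kinds)
```

A filter like this is only a first line of defense. It catches obvious formats, but it cannot recognize trade secrets, internal project names, or exploit details, so human judgment about what to share still matters.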

Krypto IT: Your Partner in a Secure AI Future

At Krypto IT, we believe AI has the potential to be a powerful tool for good. We also understand the importance of cybersecurity in the age of AI. We offer a comprehensive suite of solutions to help businesses leverage AI securely:

- Data Security Assessments: We identify and address vulnerabilities in your data storage and processing systems to minimize the risk of sensitive information exposure through LLMs.

- Security Awareness Training: We train your employees on the potential risks of sharing with LLMs and how to interact with these models securely.

- Penetration Testing: We simulate cyberattacks to identify weaknesses in your systems and ensure they are adequately protected against potential misuse of LLM-generated information by malicious actors.

Embrace the Future, But Stay Secure!

LLMs represent exciting advancements in AI, but cybersecurity must remain a top priority. Partner with Krypto IT to leverage AI securely and unlock its full potential for your business. Contact us today for a free consultation and learn how we can help you navigate the exciting world of AI with the caution it deserves!

#AI #cybersecurity #LLMs #dataprivacy #infosec #securityawareness #newbusiness #consultation

P.S. Feeling unsure about sharing with AI? Let’s chat about building a secure and responsible AI strategy for your organization!